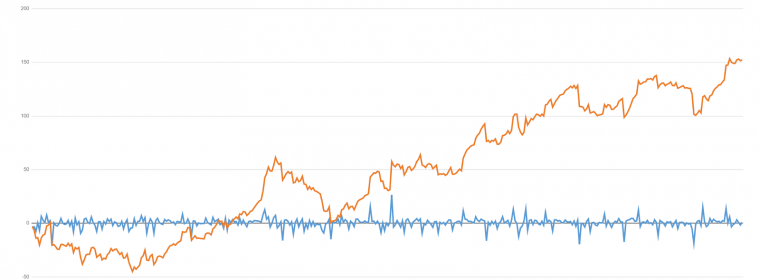

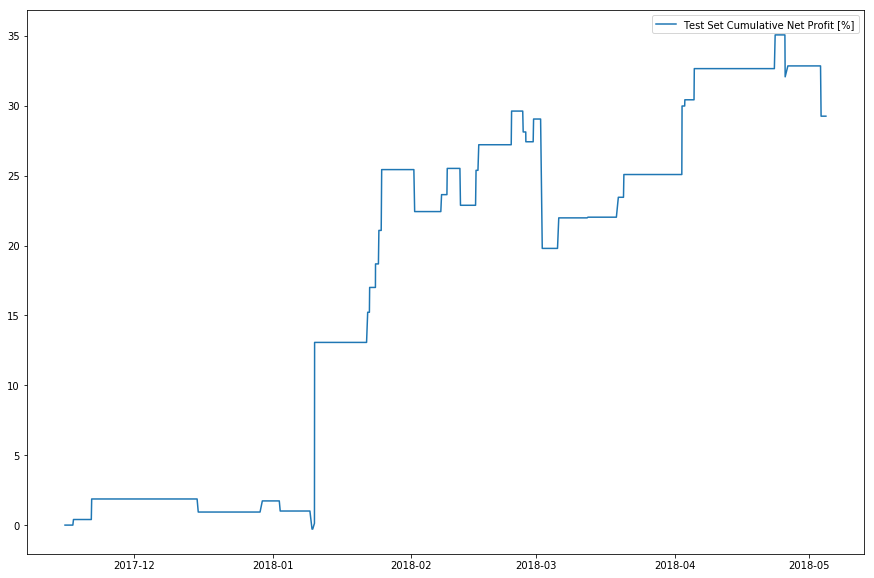

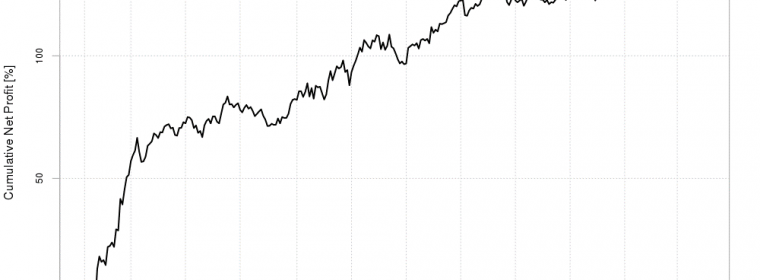

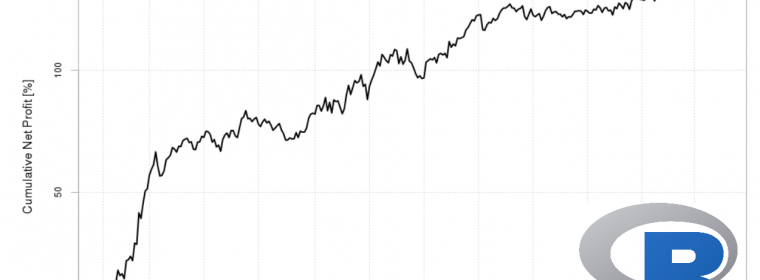

It has become increasingly apparent that data is scaling and changing faster than human-powered machine learning can make the most of it, which makes enhancing current analyses with deep learning all the more necessary. Our team invested heavily in developing a trading model that could continue […]

Enhancing Trading Models with AI