Demand for IoT data analysis has been growing rapidly with the explosion of connected devices. Unfortunately, the cost and time required to analyze this data have grown just as quickly as data volumes keep increasing.

An enterprise client that has been collecting data from millions of devices has been struggling to analyze it, because the volume of data now exceeds the capacity of its conventional data processing systems.

Their problem stems not only from the size of the data but also from a lack of agility. Their analysis requirements change rapidly, and conventional systems simply could not adapt to those changes quickly or economically.

Their latest requirement was to process 50 billion GPS records against a range of analytic specifications and identify specific behavioral patterns of interest to their customers. Requirements that change this often made conventional batch processing very difficult and expensive.

After evaluating numerous products from different vendors, they chose AuriQ's PivotBillions, a massively parallel, in-memory data processing and analysis solution. PivotBillions enabled the client to analyze all 50 billion records in real time: analytic queries against the entire data set, including ad-hoc queries, completed in seconds or tens of seconds, which allowed data analysts to test their hypotheses very efficiently.

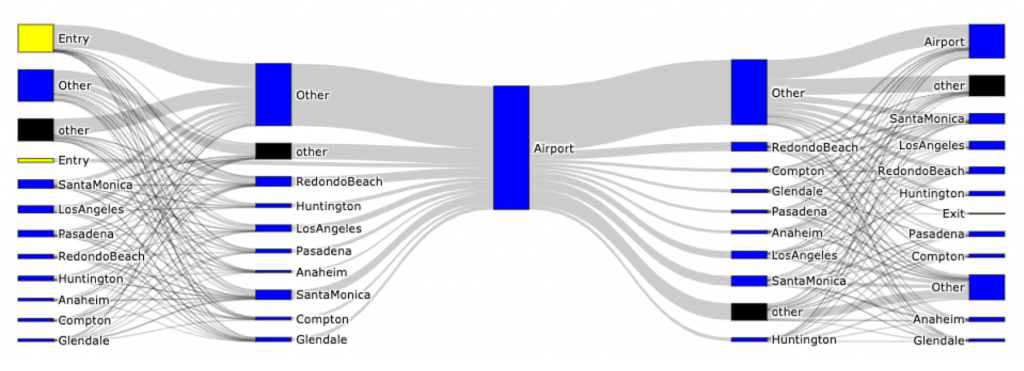

Fig. 1: An example visualization of the 50 billion analyzed GPS records, showing how devices move before and after visiting the airport.

Because PivotBillions is software that runs on Amazon Web Services (AWS), it did not require any special hardware. Its Excel-like user interface lets analysts work with the data immediately, without writing code or going through a long learning curve.

The total system cost to analyze all 50 billion records with PivotBillions on AWS was well under one-tenth that of conventional systems, and it took only a few weeks to complete the analysis and visualization tasks for the various requirements.

Facts

- Records: 50 billion records from a few million devices

- Size: 6 TB in 365 compressed files

- Repository: AWS S3

- Instances: AWS EC2 m5.large (up to 500 concurrent EC2 instances)

- Time to preprocess and load: 30 minutes (from the original data in S3)

- Conventional system: took a few days to load a sampled subset of the data into a database

- Query response time on all 50 billion records: from a few seconds to a few tens of seconds

- Conventional system: took hours to days to process a query on a sampled subset of the data

- Performance varies slightly depending on AWS conditions.
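To put the facts above in perspective, the following back-of-the-envelope calculation derives average file size, aggregate load throughput, and per-instance load from the published numbers. It is only a rough sketch: it assumes decimal units (1 TB = 10^12 bytes) and that the work was spread evenly across all 500 instances for the full 30 minutes, neither of which the case study states.

```python
# Rough averages derived from the Facts list.
# Assumptions (not stated in the case study): decimal units (1 TB = 10**12 bytes),
# and the load spread evenly over all 500 instances for the full 30 minutes.

RECORDS = 50 * 10**9        # 50 billion GPS records
SIZE_BYTES = 6 * 10**12     # 6 TB of compressed input
FILES = 365                 # number of compressed files in S3
INSTANCES = 500             # upper bound on concurrent EC2 instances
LOAD_SECONDS = 30 * 60      # 30-minute preprocess-and-load window


def load_estimates():
    """Return average per-file, aggregate, and per-instance figures."""
    return {
        "bytes_per_file": SIZE_BYTES / FILES,
        "records_per_file": RECORDS / FILES,
        "aggregate_bytes_per_s": SIZE_BYTES / LOAD_SECONDS,
        "aggregate_records_per_s": RECORDS / LOAD_SECONDS,
        "records_per_instance": RECORDS / INSTANCES,
        "bytes_per_instance_per_s": SIZE_BYTES / INSTANCES / LOAD_SECONDS,
    }


if __name__ == "__main__":
    for name, value in load_estimates().items():
        print(f"{name:>26}: {value:,.0f}")
```

Under these assumptions, each file averages about 16 GB compressed, the aggregate load rate is roughly 3.3 GB/s (about 28 million records per second), and each instance handles around 100 million records at about 6.7 MB/s. These are averages only; actual throughput depends on how the files were distributed across instances, which the case study does not describe.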

A free trial of the PivotBillions service is available. Click here to request a demo or sign up for a free account.